Dr. RAG and Mr. HyDE

Retrieval Augmented Generation (RAG) is one of the oft-used use cases for an LLM. I am pretty sure quite a few of you must be using it or creating applications that use it in your organization.

RAG involves taking in documents, chunking them, generating embedding vectors for them. When you query, it performs a similarity search of the chunked documents comparing the vectors, retrieves the relevant text chunks and passes them on to the LLM. The LLM then ‘Generates’ a response which is ‘Augmented’ by documents ‘Retrieved’ by the similarity search, therefore RAG.

But often, you notice that just a simple vector search is not always the best approach for getting similar documents. There are many methods to enhance the similarity search results such as document selection by relevance, reranking the results before passing it to an LLM and using fine-tuned models in some cases.

But here we are just going to focus on HyDE. HyDE stands for “Hypothetical Document Embeddings” and it is described in detail, along with benchmarks, in this paper : “Precise Zero-Shot Dense Retrieval without Relevance Labels“.

Though the name can be intimidating, the concept behind this is simple. Rather than using the user query to do a similarity search, the user query, instead is sent to an LLM and it is asked to generate an answer as if it has all the information required to answer the question. The response returned is then used to perform a similarity search of the embeddings instead of the original query.

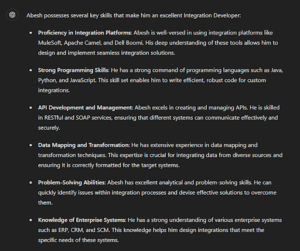

Why does this work ? Let’s take a use case. Suppose I build a RAG enabled bot and feed it my resume. If I then ask the bot “What skills does Abesh have that makes him a good Integration developer ?”, the similarity search results for that will not be as accurate if I feed this to HyDE. With HyDE, I would pass on this question to the LLM and ask it to generate a response. The response would be somewhat like the image below.

Now if you look at the response above, you will see that a lot of the information generated is false in my case. But that does not matter. The response generates more keywords that are most likely to be in the answer and that is exactly what might lead to a better similarity search and better RAG response rather than just sending in the basic user input.

If you also look at the findings of the paper linked above, you will see that in most cases it increases the accuracy of retrieval though it might not work for all cases.

As with LLMs, there is no “one ring to rule them all”. So try out this simple trick and let me know if it leads to more favorable responses for your RAG pipeline 🙂

If you want to deploy Chatbots that utilize RAG with your organizational data on BTP in a fast and secure fashion, do not forget to reach out to us ! Our RAG framework uses HyDE and quite a few other optimizations that will help you establish a pipeline faster than usual !